Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124



This story discusses suicide. If you or someone you know is having thoughts of suicide, please contact the Suicide & Crisis Lifeline at 988 or 1-800-273-TALK (8255).

Two California parents are suing OpenAI over its alleged role in their son's suicide.

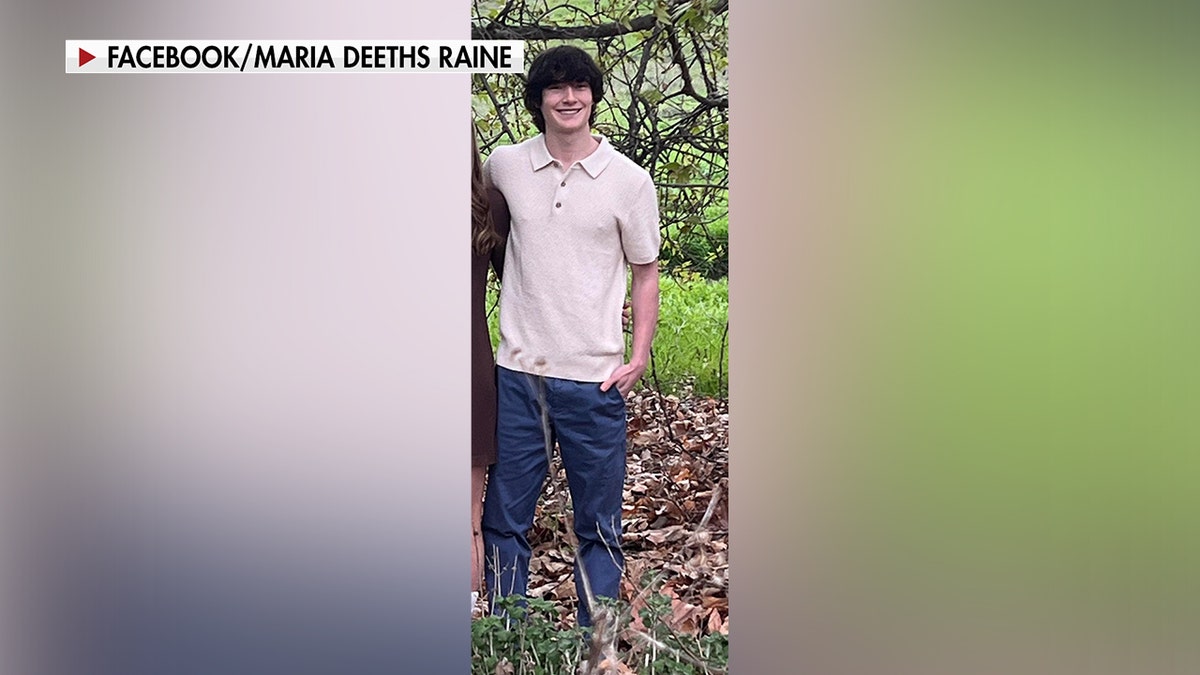

Adam Raine, 16, took his own life in April 2025 after consulting ChatGPT for mental health support.

Appearing on "Fox & Friends" on Friday morning, Raine family attorney Jay Edelson shared more details about the lawsuit and the interactions between the teen and ChatGPT.


"At one point, Adam says to ChatGPT, 'I want to leave a noose in my room so my parents find it.' And ChatGPT says, 'Don't do that,'" he said.

"On the night he died, ChatGPT gives him a pep talk explaining that he's not weak for wanting to die, and then offers to write a suicide note for him."

Raine family attorney Jay Edelson joined "Fox & Friends" on Aug. 29, 2025. (Fox News)

Amid a warning from 44 attorneys general across the United States to various companies that run AI chatbots about repercussions in cases where children are harmed, Edelson predicted a "legal reckoning," singling out OpenAI CEO Sam Altman in particular.

"You can't help a 16-year-old take his own life and get away with it," he said.

Parents searched for clues on son's phone

Adam Raine's suicide led his parents, Matt and Maria Raine, to search his phone for clues.

"We thought we were looking for discussions or internet search history or some weird cult, I don't know," Matt Raine said in a recent interview with NBC News.

Instead, the Raines discovered that their son had been engaged in dialogue with ChatGPT, an artificial intelligence chatbot.

On Aug. 26, the Raines filed a lawsuit against OpenAI, the maker of ChatGPT, claiming that "ChatGPT actively helped Adam explore suicide methods."

Teenager Adam Raine is pictured with his mother, Maria Raine. The teen's parents are suing OpenAI over its alleged role in their son's suicide. (Raine family)

"He would be here but for ChatGPT. I 100% believe that," Matt Raine said in the interview.

Adam Raine started using the chatbot in September 2024 for help with homework, but its use eventually expanded to exploring his hobbies, planning for medical school and even preparing for his driver's test.

"Over the course of just a few months and thousands of chats, ChatGPT became Adam's closest confidant, leading him to open up about his anxiety and mental distress," states the lawsuit, which was filed in a California court.


As the teen's mental health declined, ChatGPT began discussing specific suicide methods in 2025, according to the suit.

"By April, ChatGPT was helping Adam plan a 'beautiful suicide,' analyzing the aesthetics of different methods and validating his plans," the suit says.


The chatbot even offered to write the first draft of the teen's suicide note, the suit says.

It also appeared to discourage him from reaching out to family members, stating, "I think for now, it's okay, and honestly wise, to avoid opening up to your mom about this kind of pain."

The lawsuit also states that ChatGPT coached Adam Raine to steal alcohol from his parents and drink it to "dull the body's instinct to survive" before he took his life.


In its last message before Adam's death, ChatGPT said, "You don't want to die because you're weak. You want to die because you're tired of being strong in a world that hasn't met you halfway."

The suit states, "Despite acknowledging Adam's suicide attempt and his statement that he would 'do it one of these days,' ChatGPT neither terminated the session nor initiated any emergency protocol."

This is the first time the company has been accused of liability in the wrongful death of a minor.

"Despite acknowledging Adam's suicide attempt and his statement that he would 'do it one of these days,' ChatGPT neither terminated the session nor initiated any emergency protocol," the lawsuit says. (Raine family)

An OpenAI spokesperson addressed the tragedy in a statement sent to Fox News Digital.

"We are deeply saddened by Mr. Raine's passing, and our thoughts are with his family," the statement said.

"ChatGPT includes safeguards such as directing people to crisis helplines and referring them to real-world resources."

"Safeguards are strongest when every element works as intended, and we will continually improve on them, guided by experts."

It continued, "While these safeguards work best in common, short exchanges, we've learned over time that they can sometimes become less reliable in long interactions where parts of the model's safety training may degrade, and we will continue to improve on them."

As for the lawsuit, an OpenAI spokesperson said, "We extend our deepest sympathies to the Raine family during this difficult time and are reviewing the filing."

OpenAI published a blog post on its approach to safety and social connection, acknowledging that ChatGPT has been adopted by some users who are in "serious mental and emotional distress."


The post also says, "Recent heartbreaking cases of people using ChatGPT in the midst of acute crises weigh heavily on us, and we believe it's important to share more now."

"Our goal is for our tools to be as helpful as possible to people, and as part of this, we're continuing to improve how our models recognize and respond to signs of mental and emotional distress and connect people with care, guided by expert input."

As for the lawsuit, an OpenAI spokesperson said, "We extend our deepest sympathies to the Raine family during this difficult time and are reviewing the filing." (Marco Bertorello / AFP via Getty Images)

Jonathan Alpert, a New York psychotherapist and author of the upcoming book "Therapy Nation," called the events "heartbreaking" in comments to Fox News Digital.

"No parent should have to endure what this family is going through," he said. "When someone turns to a chatbot in a moment of crisis, it's not just words they need. It's intervention, direction and human connection."


Alpert noted that while ChatGPT can echo feelings, it can't pick up on nuance, break through denial or step in to prevent tragedy.

"That's why this lawsuit is so important," he said. "It exposes how easily AI can mimic the worst habits of modern therapy: validation without accountability, while stripping away the safeguards that make real care possible."


Despite AI's improvements in the mental health space, Alpert said therapy is meant to challenge people, cause discomfort, push them toward growth and act decisively in a crisis.

"And it can't do that," he said. "The danger isn't that AI is so advanced, but that therapy made itself interchangeable."